How to cache frequently accessed data using AWS ElastiCache, Lambda, and API Gateway with Terraform and Serverless

Caching frequently accessed data can significantly improve the performance and efficiency of your applications. In this article, we will demonstrate how to use AWS ElastiCache Redis in conjunction with AWS Lambda and API Gateway, all managed through Terraform and the Serverless framework. This guide aims to provide a comprehensive solution to a common business challenge by integrating multiple AWS services. Additionally, it reflects the knowledge I gained while preparing for the AWS Solutions Architect exam.

Table of contents

- Introduction

- Development

- Conclusion

Introduction

In this section we will cover the theoretical basics and the terms needed to understand the solution for caching frequently accessed data with Lambda.

The Serverless Framework is a tool for using different AWS services together while hiding most of the wiring between them. We don’t need to set things up or trigger actions manually. Everything is defined in code, and the services are billed on a pay-per-use basis.

AWS Lambda represents a single executable unit of code dedicated to one piece of functionality. It runs without us having to think about servers, and we pay only for the compute time that the Lambda function consumes.

Terraform is an open-source infrastructure as code software tool that provides a consistent CLI workflow to manage hundreds of cloud services. It allows you to define both cloud and on-prem resources in human-readable configuration files that you can version, reuse, and share. Terraform handles the complexity of resource dependencies and orchestration, ensuring that your infrastructure is provisioned in the correct order.

Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. It acts as a “front door” for applications to access data, business logic, or functionality from your backend services. API Gateway handles all the tasks involved in accepting and processing up to hundreds of thousands of concurrent API calls, including traffic management, authorization and access control, monitoring, and API version management.

Amazon Virtual Private Cloud (VPC) is a logically isolated virtual network within the AWS cloud where you can launch AWS resources in a secure environment that you define. You have complete control over your virtual networking environment, including selection of your own IP address range, creation of subnets, and configuration of route tables and network gateways. VPC provides advanced security features such as security groups and network access control lists to help you secure your resources.

AWS Systems Manager (SSM) is a collection of capabilities that helps you automate management tasks such as collecting system inventory, applying operating system patches, automating the creation of Amazon Machine Images (AMIs), and configuring operating systems (OSs) and applications at scale. SSM provides a unified user interface so you can view operational data from multiple AWS services and automate operational tasks across your AWS resources.

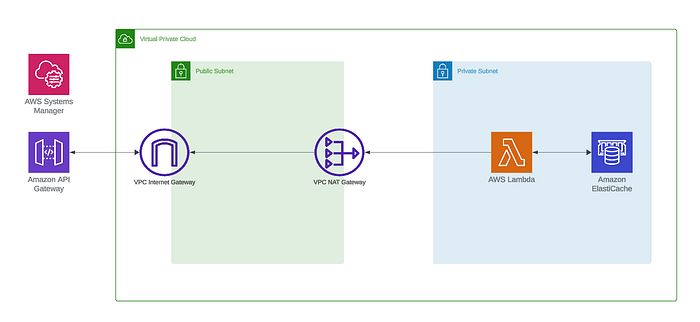

To run Lambda and ElastiCache together in AWS, both should be placed in a custom VPC, for reasons of security, network configuration, and resource management. The same applies to other database services such as RDS and DocumentDB.

ElastiCache

- Security and Isolation: Running ElastiCache within a VPC allows you to isolate your cache clusters from the public internet, enhancing security. You can control access to your cache nodes using VPC security groups.

- Network Configuration: A VPC provides fine-grained control over network settings, such as IP address ranges, subnets, and routing tables. This control is crucial for managing network traffic and ensuring that your cache nodes are accessible only to authorized resources.

- Compliance: Many organizations have compliance requirements that mandate the use of private networks for sensitive data. Using a VPC helps meet these requirements by keeping data within a controlled environment.

Lambda

- Access to Private Resources: Lambda functions often need to access resources that are only available within a VPC, such as RDS databases, ElastiCache clusters, or other services that are not exposed to the public.

- Security: By running Lambda functions within a VPC, you can leverage VPC security groups and network ACLs to control inbound and outbound traffic, ensuring that your functions can only communicate with authorized resources.

- Network Configuration: Similar to ElastiCache, running Lambda functions within a VPC allows you to manage network settings and ensure that your functions can communicate with other VPC resources efficiently.

By default, Lambda functions have access to the public internet. This is no longer the case once they have been attached to a VPC. If the functions still need to reach resources on the internet, we need to set up a NAT instance or an Amazon NAT Gateway.

Here is an explanation of all the terms used in the Terraform file:

- VPC (Virtual Private Cloud): A VPC is a logically isolated virtual network within the AWS cloud where you can launch AWS resources in a secure environment.

- Subnet: A subnet is a range of IP addresses within a VPC that allows you to segment your network and deploy resources in specific Availability Zones.

- CIDR (Classless Inter-Domain Routing): CIDR is a method for allocating IP addresses and IP routing, represented in the format x.y.z.t/p, where p indicates the number of bits used for the network prefix.

- Security Group: A security group acts as a virtual firewall for your AWS resources, controlling inbound and outbound traffic based on specified rules.

- Ingress: Ingress refers to the rules that control incoming traffic to your AWS resources within a security group.

- Egress: Egress refers to the rules that control outgoing traffic from your AWS resources within a security group.

- Internet Gateway: A horizontally scaled, redundant, and highly available VPC component that allows communication between instances in your VPC and the internet.

- NAT Gateway: A managed service that enables instances in a private subnet to connect to the internet or other AWS services, but prevents the internet from initiating connections with those instances.

- Route Table: A set of rules, called routes, that determine where network traffic from your subnet or gateway is directed.

- Network ACL: A stateless firewall that controls inbound and outbound traffic at the subnet level.
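The CIDR notation from the list above can be made concrete with a little arithmetic. The following is a minimal sketch in JavaScript; the function names and the example ranges (`10.0.0.0/16`, `10.0.1.0/24`) are assumptions chosen for illustration and are not necessarily the ranges used in the Terraform file.

```javascript
'use strict';

// Convert a dotted IPv4 address into an unsigned 32-bit number.
function ipToInt(ip) {
  return ip.split('.').reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Convert an unsigned 32-bit number back into dotted IPv4 notation.
function intToIp(n) {
  return [24, 16, 8, 0]
    .map((shift) => Math.floor(n / 2 ** shift) % 256)
    .join('.');
}

// Describe a CIDR block: its prefix length, how many addresses it holds,
// and the first and last address of the range.
function describeCidr(cidr) {
  const [ip, prefixText] = cidr.split('/');
  const prefix = Number(prefixText);
  const addressCount = 2 ** (32 - prefix);
  // Round the address down to the start of its block.
  const first = Math.floor(ipToInt(ip) / addressCount) * addressCount;
  return {
    prefix,
    addressCount,
    firstAddress: intToIp(first),
    lastAddress: intToIp(first + addressCount - 1),
  };
}

// Hypothetical VPC range and one subnet carved out of it.
const vpc = describeCidr('10.0.0.0/16');
const subnet = describeCidr('10.0.1.0/24');
console.log(vpc.addressCount, subnet.addressCount);
```

A /16 block holds 65536 addresses and a /24 block holds 256. AWS reserves five addresses in every subnet, so a /24 subnet offers 251 addresses for resources.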

Development

Before we start we should be sure that we have our AWS credentials exported on our machine. We will use eu-central-1 for the AWS region.

In this article we are using serverless version 3.35.2, so we should install exactly that one:

npm install -g serverless@3.35.2

First, let’s create a new serverless project using the next command:

serverless create --template aws-nodejs --name node-elasticache-example --path node-elasticache-example

We have created a new project directory node-elasticache-example with two main files, handler.js and serverless.yml.

handler.js contains the Lambda function that is executed when the endpoint is called. The serverless.yml file contains the configuration of our service, such as environment variables, roles, events that trigger the Lambda, and external services used.

We will explain it in detail later, but first let’s explain the main things related to the infrastructure and prepare it using Terraform.

For the infrastructure, we will go with the IaC (infrastructure as code) approach, and we are using Terraform. One of the prerequisites is to have Terraform installed on the machine. Only the role and the security group for the Lambda will be created through the Serverless Framework and the serverless.yml file.

We will use AWS SSM for storing confidential environment variables such as vpcId, subnetId, and elasticacheEndpoint.
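Once such values reach the Lambda as environment variables, the function has to read and validate them. Here is a minimal sketch of that step; the variable names `ELASTICACHE_ENDPOINT` and `ELASTICACHE_PORT` and the function `loadCacheConfig` are assumptions made for illustration, not names taken from the project.

```javascript
'use strict';

// Read the cache connection settings from environment variables.
// ELASTICACHE_ENDPOINT and ELASTICACHE_PORT are hypothetical names.
function loadCacheConfig(env = process.env) {
  const host = env.ELASTICACHE_ENDPOINT;
  if (!host) {
    throw new Error('ELASTICACHE_ENDPOINT is not set');
  }

  // 6379 is the default Redis port.
  const port = Number(env.ELASTICACHE_PORT ?? 6379);
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error('ELASTICACHE_PORT is not a valid port number');
  }

  return { host, port };
}

// Example with an explicit object instead of process.env.
const exampleConfig = loadCacheConfig({
  ELASTICACHE_ENDPOINT: 'cache.example.internal', // placeholder hostname
});
console.log(exampleConfig);
```

Failing fast on a missing endpoint makes a misconfigured deployment visible on the first invocation instead of surfacing later as a connection timeout.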

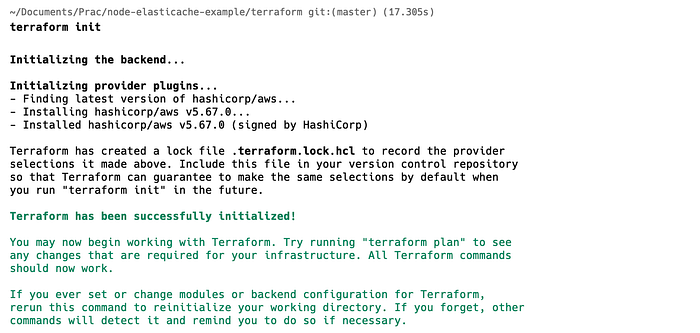

We will start with terraform initialization.

terraform init

This command initializes the Terraform working directory: it downloads the required providers and sets up the backend that holds the state.

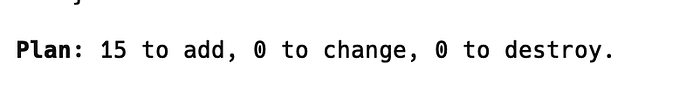

terraform plan

This command shows the changes that would be executed, without applying them. The last step is applying these changes:

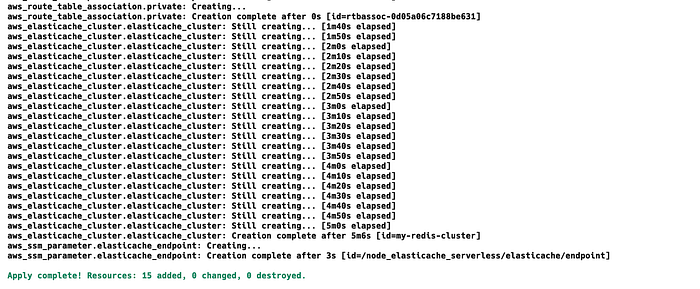

terraform apply --auto-approve

By adding the --auto-approve flag, we skip typing yes to approve the command.

At the end, we can always destroy infrastructure changes with the next command:

terraform destroy --auto-approve

In the next part we will explain the AWS Lambda capabilities, triggers, etc.

The first part, provider, shows information related to the runtime, environment variables, and role permissions. We enabled HTTP API triggering using API Gateway. As we mentioned, this Lambda is part of the private subnet (this can be seen in the diagram). There is also a security group for our Lambda, which allows all egress traffic.
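To illustrate what a Lambda behind an API Gateway HTTP API receives and returns, here is a minimal sketch. It assumes the HTTP API payload format 2.0, where the request path is in `rawPath` and the method in `requestContext.http.method`; the names `handler` and `buildResponse` are hypothetical and not taken from the project.

```javascript
'use strict';

// Build a response in the shape API Gateway expects from a Lambda:
// a status code, headers, and a string body.
const buildResponse = (statusCode, payload) => ({
  statusCode,
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify(payload),
});

// Hypothetical handler: echoes the HTTP method and path of the request.
const handler = async (event) => {
  const method = event?.requestContext?.http?.method ?? 'UNKNOWN';
  const path = event?.rawPath ?? '/';
  return buildResponse(200, { message: 'ok', method, path });
};

module.exports = { handler, buildResponse };
```

The body must be a string, which is why the payload goes through `JSON.stringify` before it is returned.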

To have a production-ready project we need to initialize a Node project, which automatically creates the package.json file.

npm init -y

We need to install the ioredis package for Redis.

npm install ioredis

In the handler.js file we can see the logic of reading data from the cache and writing data there.

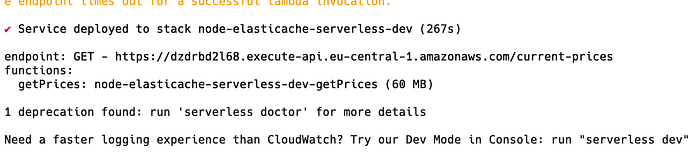
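The read-then-write logic can be sketched as a cache-aside function. This is a minimal illustration under stated assumptions, not the project's handler: `getWithCache`, `createFakeCache`, and `fetchFresh` are hypothetical names, and the in-memory fake stands in for a real client so the sketch runs without a Redis server. The `get(key)` and `set(key, value, 'EX', seconds)` call shapes match ioredis, so a client created with `new Redis(...)` could be passed in instead.

```javascript
'use strict';

// Cache-aside: try the cache first, fall back to the origin on a miss,
// then store the fresh value with a time-to-live.
async function getWithCache(cache, key, ttlSeconds, fetchFresh) {
  const cached = await cache.get(key); // resolves to null on a miss
  if (cached !== null && cached !== undefined) {
    return { source: 'cache', data: JSON.parse(cached) };
  }

  const data = await fetchFresh();
  await cache.set(key, JSON.stringify(data), 'EX', ttlSeconds);
  return { source: 'origin', data };
}

// In-memory stand-in for a Redis client (it ignores the TTL arguments).
function createFakeCache() {
  const store = new Map();
  return {
    get: async (key) => (store.has(key) ? store.get(key) : null),
    set: async (key, value) => {
      store.set(key, value);
      return 'OK';
    },
  };
}

// Two lookups for the same key: the first hits the origin, the second the cache.
async function demo() {
  const cache = createFakeCache();
  let calls = 0;
  const fetchFresh = async () => {
    calls += 1;
    return { id: 1, name: 'example' }; // placeholder data
  };

  const first = await getWithCache(cache, 'item:1', 60, fetchFresh);
  const second = await getWithCache(cache, 'item:1', 60, fetchFresh);
  return { first: first.source, second: second.source, calls };
}
```

Passing the client in as a parameter keeps the caching logic testable without network access. In a Lambda, the real client would typically be created once outside the handler so that warm invocations reuse the connection.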

We are deploying our serverless application using:

sls deploy

After successfully calling our endpoint we can see the response:

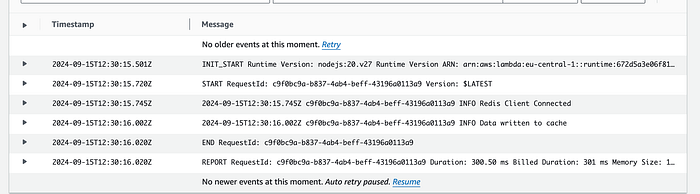

In the first execution we can see that the data was fetched from the endpoint we provided:

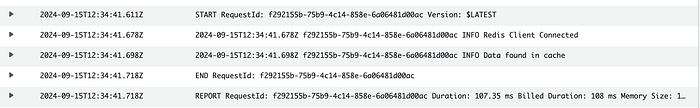

In the second execution we can see that the data was read from the cache.

At the end we can remove our serverless application.

sls remove --stage dev --region eu-central-1

Full code can be found in the next link.

Note: At the end of the example, remove the serverless application and destroy the infrastructure using Terraform to avoid unnecessary costs.

Conclusion

By following this guide, you have learned how to set up a robust caching mechanism using AWS ElastiCache Redis, Lambda, and API Gateway, all orchestrated with Terraform and the Serverless framework. This setup not only enhances the performance of your applications by reducing latency but also ensures a scalable and secure environment. Leveraging these AWS services together allows you to build efficient, production-ready solutions that can handle frequent data retrieval seamlessly.

Benefits

1. Comprehensive Integration

The article provides a detailed guide on integrating multiple AWS services (ElastiCache Redis, Lambda, API Gateway, SSM) using Terraform and the Serverless framework, offering a holistic approach to solving a common business challenge.

2. Scalability and Reliability

The solution outlined in the article ensures that your application can scale efficiently and remain reliable, even under high load, by leveraging AWS’s managed services.

Drawbacks

1. Cost

Hourly Charges: NAT Gateways incur a charge for each hour they are running. This can add up, especially if we have multiple NAT Gateways across different Availability Zones.

Data Processing Fees: There is a data processing fee for each gigabyte of data that passes through the NAT Gateway. This can become significant if you are transferring large amounts of data.

2. Complexity

Integrating multiple AWS services (ElastiCache Redis, Lambda, API Gateway) and managing them with Terraform and the Serverless framework can be complex and may require a steep learning curve for those unfamiliar with these technologies.
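The two NAT Gateway cost components listed under Cost combine into a simple estimate. The sketch below shows only the arithmetic. The function name is hypothetical, and the rates in the example are placeholders, not actual AWS prices, so the current pricing for your region should be substituted.

```javascript
'use strict';

// Estimate NAT Gateway cost from its two components:
// an hourly charge per gateway and a fee per gigabyte processed.
function estimateNatGatewayCost({ gateways, hours, hourlyRate, processedGb, perGbRate }) {
  const hourlyCharges = gateways * hours * hourlyRate;
  const dataCharges = processedGb * perGbRate;
  return { hourlyCharges, dataCharges, total: hourlyCharges + dataCharges };
}

// Placeholder inputs: two gateways running for a 720-hour month.
// The rates are illustrative only and are NOT real AWS prices.
const estimate = estimateNatGatewayCost({
  gateways: 2,
  hours: 720,
  hourlyRate: 0.1,
  processedGb: 100,
  perGbRate: 0.1,
});
console.log(estimate.total.toFixed(2));
```

The hourly part grows with the number of gateways even when no traffic flows, which is why running one NAT Gateway per Availability Zone is the more expensive layout.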

Future work

- Add CI/CD to create/update infrastructure and deploy application automatically.

- Provide different solutions for the same use case.